Do you all ever think about infinity? It’s one of those things unique to human cognition and thus sort of unavoidable, like object permanence or episodic foresight. We are forced to confront the infinite as a matter of course. Yet to ponder the infinite is paradoxical, for thinking of it limits the limitless.

The concept of infinity comes out of mathematics, of course, but I think it transcends the disciplinary borders typically ascribed to it. Infinity has more in common with faith and religion than with math. The infinite and God both possess a kind of omnipotence. And like the ontological argument for the existence of God, the reasoning runs that if we can conceive of infinity in our minds, even in a watered-down version, then it must exist in a purer form elsewhere. To imagine the infinite implies that an even more infinite infinity exists outside of us.

Outside of math class, infinity is historically contingent, repackaged by different cultures to reflect the historical conditions surrounding them. In Ancient Greece, when thinkers were just beginning to grapple with the concept, infinity was first inferred from the banal. It presented itself in such mundane tasks as walking from point A to point B, a distance containing infinitely many halfway points (the puzzle behind Zeno’s dichotomy paradox), or in the shapeless water of a river, infinitely turning in on itself so that, as Heraclitus put it, one can “never step in the same river twice.”

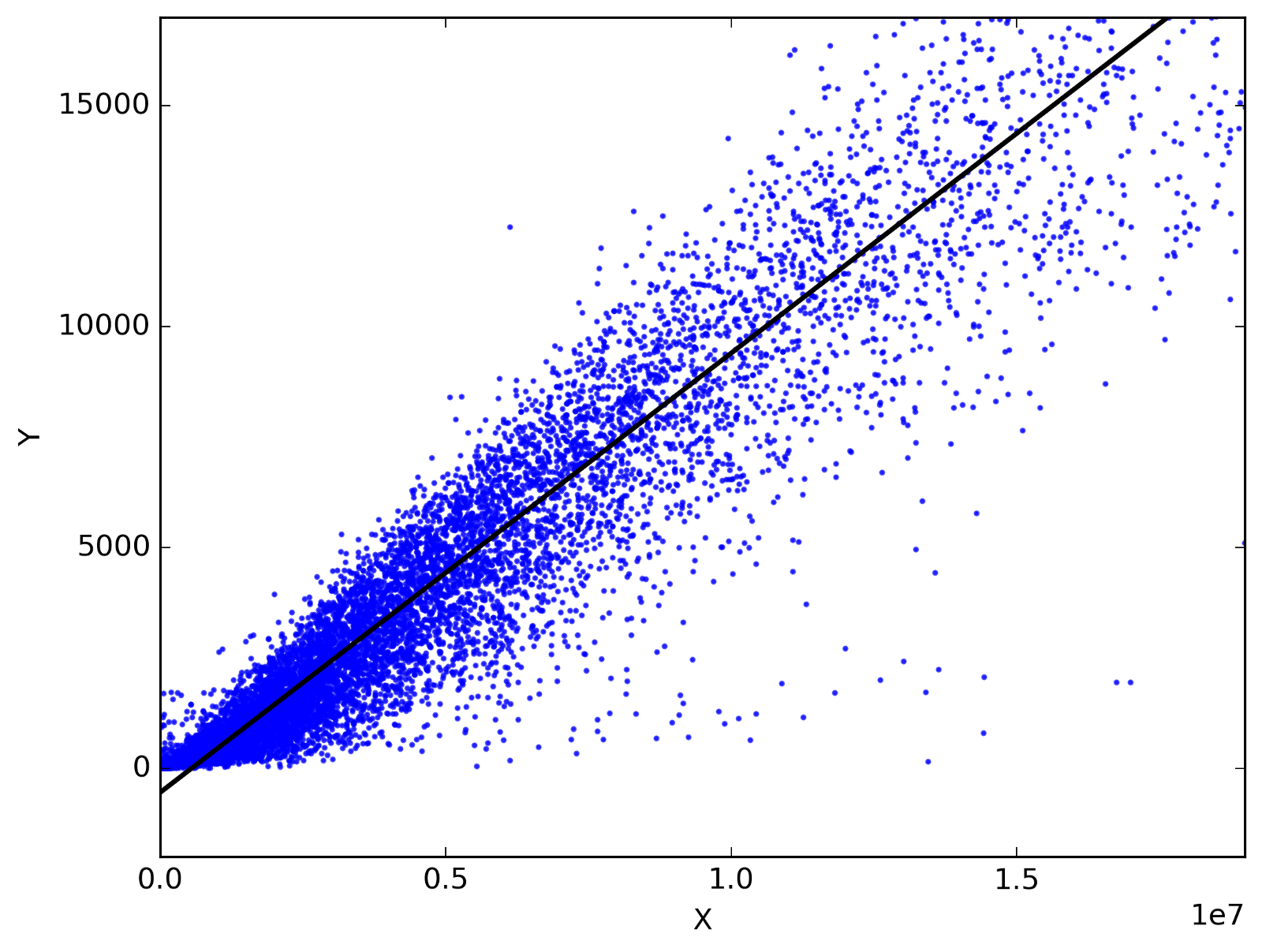

For us today, the infinite reveals itself in myriad other ways, consumer choices chief among them. I often find myself mindlessly scrolling through Netflix’s seemingly infinite offerings, squandering two hours of my day. I am similarly paralyzed by the endless consumer choices aggregated on Amazon or on display at grocery stores, so much so that I often don’t buy whatever it was I needed in the first place. I also experience a flavor of the infinite when I think about voting. While the votes cast in an election are not literally infinite, there are so many of them relative to my one that political outcomes feel infinitely distant and detached from my single blue vote cast in a red state: a snowflake in an avalanche, as the popular analogy goes.

While infinity figures in important mathematical operations (it’s a crucial part of calculus, after all), I think its impact is more conceptual than arithmetical. The infinite is, in other words, more rhetorical than numerical. Like a drinking glass, it gives shape to the aqueous experience of existing in an infinitely expanding and timeless universe; it names a sensation commonly felt among individuals living infinitely singular lives in a godless world of billions. Many abstractions short-circuit our cognitive console, but naming them cools us down. We can’t really experience the purely infinite, but we can name it. We can know it’s out there.

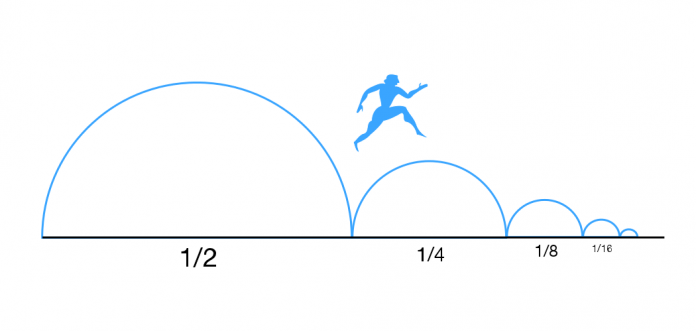

Knowing something exists is a prerequisite for ignoring it. And maybe it’s time to start ignoring the infinite. Calculus has taught us many things, but perhaps most importantly it resolves part of infinity’s paradoxical nature. We now know that the infinite is only one side of the equation. You can keep dividing a number in half forever, but add all those pieces back up (a half, plus a quarter, plus an eighth, and so on) and you arrive exactly at the whole number you started with. In our culture, our politics, and our lives, we are too fixated on one side of the equation: the side with infinite divisions. The other side remains a self-contained unit, a finite value that the ever-smaller divisions must add up to, even though there are infinitely many of them. I think we all stand to benefit from a greater focus on the whole rather than the infinitely divided parts.